George Soros, Reflexivity and Market Reversals

"No matter how complex, the underlying basis of almost every economic theory is that markets search for prices that create a balance between supply and demand. Consequently, when all participants act rationally, free markets and the economy are stable.

George Soros does not agree.

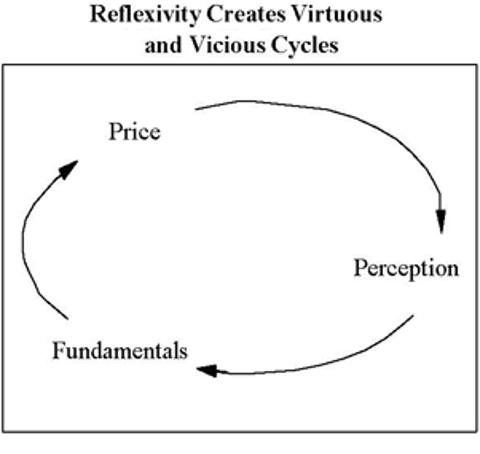

His theory of reflexivity suggests that, sometimes, markets are inherently unstable. The underlying forces create negative feedback loops that cause prices to diverge wildly from equilibrium. Reflexivity helps explain why this happens and is the philosophy that he uses to identify these unstable environments. When prices move to the extreme, he bets on a reversal. As evidenced by his investment track record, Mr. Soros has applied his theories with great success.

In this paper, we examine the ideas behind reflexivity and discuss how they result in parabolic price patterns. The belief that markets simply tend to overshoot in search for equilibrium is inadequate. When destabilizing forces take hold, businesses, industries and financial markets move along a relentless path away from equilibrium, sometimes creating a virtuous spiral of prosperity, and other times a vicious cycle of economic destruction.

...

Mr. Soros calls it his life’s work, and has written several books [1, 2] on the topic. Even so, he admits to receiving as much criticism as praise for his theories on the economy and financial markets. In a 1994 speech [3], Soros attempted to explain his concept of reflexivity in the following statement,

“There is an active relationship between thinking and reality, as well as the passive one which is the only one recognized by natural science and, by way of false analogy, also by economic theory. I call the passive relationship the “cognitive function” and the active relationship the “participating function,” and the interaction between the two functions I call “reflexivity.”

Reflexivity is, in effect, a two-way feedback mechanism in which reality helps shape the participants’ thinking and the participants’ thinking helps shape reality in an unending process…”

Wow. Clearly his talents as a practitioner are superior to his ability to explain his ideas, and enlighten others. Our goal in this paper is to simplify and clarify. We will even be so bold as to draw some conclusions about the current market environment based on reflexivity."

http://seekingalpha.com/article/126165-george-soros-reflexivity-and-market-reversals
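
The quoted article describes reflexivity only in prose. As a rough illustration, here is a hypothetical toy model (not Soros's and not the article's). The price is pulled toward a fixed fundamental value, which is an equilibrium-seeking correction. Participants also extrapolate the last observed price change, and their trading pushes the price further in that direction, so thinking shapes reality. The names `anchor` and `trend`, and all the numbers, are assumptions made for this sketch.

```python
def simulate_reflexive_prices(fundamental, p0, p1, anchor, trend, steps):
    """Toy price path: equilibrium pull plus self-reinforcing trend chasing.

    Hypothetical model for illustration only; not taken from Soros or the article.
    """
    prices = [p0, p1]
    for _ in range(steps):
        prev, current = prices[-2], prices[-1]
        # Equilibrium-seeking correction: pulls the price back toward the fundamental.
        correction = anchor * (fundamental - current)
        # Participants extrapolate the last observed move, and their trades
        # push the price further the same way (thinking shapes reality).
        chase = trend * (current - prev)
        prices.append(current + correction + chase)
    return prices


calm = simulate_reflexive_prices(100.0, 100.0, 101.0, anchor=0.1, trend=0.5, steps=50)
boom = simulate_reflexive_prices(100.0, 100.0, 101.0, anchor=0.1, trend=1.2, steps=50)
print(round(calm[-1], 3))                 # settles back at the fundamental
print(max(abs(p - 100.0) for p in boom))  # deviation has grown far beyond the initial 1.0
```

With a weak trend term, the initial deviation dies out. In this toy, the deviation from the fundamental follows a linear recurrence, and the product of its characteristic roots equals `trend`. Once `trend` exceeds 1, at least one root lies outside the unit circle, so the price swings away from equilibrium in ever-wider boom-bust oscillations instead of settling. The toy also ignores the fact that real prices cannot go negative.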

Philosophical Speculation... I think of "utility" as conceptual/semantic, and of the means/medium of expressing/exchanging it as grammatical/syntactic. If there were a way to find "information-preserving transformations" that nearly accurately preserve intrinsic interpretations of value(s) in spite of extrinsic differences in representation, there would be no need to establish a uniform global currency, merely a reliable method of translating between relative/absolute notions of utility.

Mission

"The Future of Humanity Institute’s mission is to bring careful thinking to bear on big-picture questions about humanity and its prospects. The Institute’s work focuses on how future technology might affect fundamental parameters of the human condition, the risks and opportunities involved, and the epistemic, moral, and prioritization issues that confront actors who pursue long-range global objectives. We currently pursue four interlinked research programs:

- Global catastrophic risks: What are the biggest threats to global civilization and human well-being? How can the human species survive the 21st century?

- Human enhancement: How can medicine and technology be used to enhance basic biological capacities, such as cognition and lifespan? Can enhancement be ethical and wise?

- Applied epistemology and rationality: How can we make better decisions under conditions of profound uncertainty and high stakes? How can we reduce bias and human error in our decision-making?

- Future technologies: What would be the impacts of potentially transformative technologies such as advanced nanotechnology and artificial intelligence?

Despite the great theoretical and practical importance of these issues, they have received scant academic attention. FHI enables a few outstanding and creative intellects to work on these pivotal problems in close collaboration. Our goal is to pioneer research that demonstrates how such problems can be rigorously and fruitfully investigated.

The Institute combines world-class philosophical expertise with strong multidisciplinary scientific capability. Founded in 2005, the Institute has established itself as the world leader in its fields of research. The Institute has previously, successfully worked on several closely related subjects and has pioneered quite a few ideas and approaches of relevance to the present Programme, including, inter alia, foundational work on existential risks, the concept of crucial considerations, the whole brain emulation roadmap, meta-level uncertainty in risk assessment, fundamental normative uncertainty, observation selection effects, human enhancement ethics and the reversal test, the singleton hypothesis, global catastrophic risks, and some approaches to machine intelligence safety analysis.

Our research staff is drawn from a variety of fields, including physics, neuroscience, economics, and philosophy. Several of us have an academic background in more than one discipline. We use whatever intellectual tools we judge most likely to be effective for the specific problem at hand, often combining the techniques of analytic philosophy with those of theoretical and empirical scientific inquiry.

FHI also works to promote public engagement and informed discussion in government, industry, academia, and the not-for-profit sector."

"Utility is taken to be correlative to Desire or Want. It has been already argued that desires cannot be measured directly, but only indirectly, by the outward phenomena to which they give rise: and that in those cases with which economics is chiefly concerned the measure is found in the price which a person is willing to pay for the fulfilment or satisfaction of his desire. (Marshall 1920:78)"

http://en.wikipedia.org/wiki/Metagaming

Epistemic Game Theory: Beliefs and Types

http://faculty.wcas.northw

Harsanyi's Utilitarian Theorem: A Simpler Proof and Some Ethical Connotations:

http://www.stanford.edu/~h

Type spaces and conceptions of utility are intimately linked. Future informatics-based commerce will have to develop sensitivity to local contexts. The Internet is paving the way for such "interoperability" standards, and the semantic conception of utility is subject to "more is different", as is any exchange-regulated form of interaction.

...

"The most important benefit of derivatives is the ability to manage market risk, i.e. to lower the actual market risk level to the desired one. This task of minimizing or eliminating risk, often called hedging, means that derivatives can safeguard corporates and financial institutions against unwanted price movements.

...

A second essential function fulfilled by derivatives is price discovery, allowing investors to trade on future price expectations. By trading in derivatives, investors effectively disclose their beliefs on future prices and increase the amount of information available to all market participants. In this way, derivatives enhance valuation and thereby allocation efficiency."

http://www.world-exchanges
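
The quoted passage describes hedging as lowering market risk to a desired level. The source does not say how to size such a hedge. One common textbook approach is the minimum-variance hedge ratio, h* = Cov(ΔS, ΔF) / Var(ΔF). This is the number of futures units to short per unit of spot exposure so that the variance of the hedged change ΔS - hΔF is as small as possible. Below is a minimal sketch; the function names and the data are made up for illustration.

```python
def _mean(xs):
    return sum(xs) / len(xs)


def _sample_cov(xs, ys):
    """Sample covariance (n - 1 denominator) of two equal-length series."""
    mx, my = _mean(xs), _mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def min_variance_hedge_ratio(spot_changes, futures_changes):
    """h* = Cov(dS, dF) / Var(dF): futures units to short per unit of spot."""
    return _sample_cov(spot_changes, futures_changes) / _sample_cov(futures_changes, futures_changes)


def hedged_variance(spot_changes, futures_changes, ratio):
    """Variance of the hedged position's change, dS - ratio * dF."""
    hedged = [s - ratio * f for s, f in zip(spot_changes, futures_changes)]
    return _sample_cov(hedged, hedged)


# Hypothetical price changes in which spot moves exactly twice as much as futures.
futures = [1.0, -0.5, 2.0, -1.5, 0.5]
spot = [2.0 * f for f in futures]
h = min_variance_hedge_ratio(spot, futures)
print(h, hedged_variance(spot, futures, h), hedged_variance(spot, futures, 0.0))
```

In this contrived data the hedge removes all variance. With real data, the residual variance measures the basis risk that the hedge cannot eliminate.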

"Cybernetics, as we all know, can be described in many ways. My cybernetics is neither mathematical nor formalized. The way I would describe it today is this: Cybernetics is the art of creating equilibrium in a world of possibilities and constraints."

...

"The theory of interconnectedness of possible dynamic self-regulated systems with their subsystems"

...

"Today's cybernetics is at the root of major revolutions in biology, artificial intelligence, neural modeling, psychology, education, and mathematics. At last there is a unifying framework that suspends long-held differences between science and art, and between external reality and internal belief."

...

"The word was first used by Plato to mean 'the art of steering' or 'the art of governing'. It was adopted in the 1940s at MIT to refer to a way of thinking about how complex systems coordinate themselves in action: "the science of control and communication, in the animal and the machine", as Wiener put it. Cybernetics was originally formulated as a way of producing mathematical descriptions of systems and machines. It solved the paradox of how fictional goals can have real-world effects by showing that information alone (detectable diffferences) can bring order to systems when that information is in a feedback relation with that system. This essentially bootstraps perception (detection of differences) into purpose.

In broad terms, cybernetics incorporates the following three key ideas: systemic dynamicity; homeostasis around a value; and recursive feedback."

...

"First order cybernetics: The cybernetics of observed systems.

Second order cybernetics: The cybernetics of observing systems."

...

"the study of justified intervention"

http://www.asc-cybernetics.org/foundations/definitions.htm
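
The definitions quoted above name homeostasis around a value and recursive feedback. They also describe detected differences, fed back into a system, steering it toward a goal. Here is a minimal sketch of that idea. It assumes a simple proportional corrective rule, a standard control-engineering choice that the quoted sources do not specify. At each step, the regulator detects the difference between the goal and the current state and feeds a fraction of it back as a correction. The names `set_point`, `gain`, and `disturbance` are hypothetical.

```python
def regulate(state, set_point, gain, steps, disturbance=0.0):
    """Proportional negative feedback: each step corrects a fraction of the detected error."""
    history = [state]
    for _ in range(steps):
        error = set_point - state                     # detected difference from the goal
        state = state + gain * error + disturbance    # correction fed back into the system
        history.append(state)
    return history


print(regulate(15.0, 20.0, gain=0.5, steps=5))
# [15.0, 17.5, 18.75, 19.375, 19.6875, 19.84375]
```

With no disturbance, the state homes in on the set point. Under a constant disturbance, pure proportional feedback settles at `set_point + disturbance / gain` rather than at the goal itself. This residual offset is one reason practical regulators often add integral action.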

Isonomia — equal law — is the historical and philosophical foundation of liberty, justice, and constitutional democracy. Aristotle considered it the core ingredient of a civilization that seeks to promote individual and societal happiness. First ordained by the ancient Athenian lawgiver Solon (c. 638-558 B.C.), isonomia was later championed by the Roman Republic's finest orator, Cicero; but it was subsequently eclipsed for a millennium, until (in effect) "rediscovered" in the eleventh century A.D. by the founders of the Western Legal Tradition, the law students of Bologna who synthesized the Greek genius for systematic thought, the Roman genius for pragmatic administration, and the medieval Judeo-Christian-Islamic preoccupation with the "uses" of faith and reason to secure a common humanity under a common deity.

Empathy, Liberty, Equality. These concepts are often linked, a triune "much of a muchness"; there is a good reason for this; it is rooted in the history of the idea of isonomia, the parent of demokratia.

Our most precious legacy is our understanding — originating in our evolving capacity for empathy — that we must be equal in our liberties and hence equal in the restraints upon our liberties.

We must be equal under the law, and hence equal in the making of laws. Liberty and law are coevolving, and any "constitutional democracy" worthy of this oh-so-precious name must reflect that sequence of foundational ideas.

I believe that Socrates' metaphor captures and "domesticates" the deep wisdoms of that ancient Greek trinity of ontology, epistemology, and teleology; he bends philosophy to his will, to human purposes, in ways that still ring true.

The art of the helmsman requires integrating knowledge of the changeless stars with knowledge of the naturally-changing — the winds and waves of circumstance — in order to choose, and act, and react, with reference to the angle of the rudder, the trim of the sail, but more ... to change these (a) in relation to each other, for each affects the other, and (b) in relation to achieving an ultimate goal, such as safe passage across open seas to prosperous harbors.

This is the art of cybernetics — goal-focused governance that cultivates and harvests feedback, continuously monitoring "progress" in light of hierarchies of facts and values, including ultimate objectives. Law is the quintessential cybernetic calling."

http://www.jurlandia.org/isonomia.htm

"The Letter of the Law is the literal meaning of the law (the actual wording of the law in the original text). The Spirit of the Law is the intent or purpose of the law (what the law-maker intended the law to do)."

http://en.wikipedia.org/wiki/Letter_and_spirit_of_the_law

"All levels of analysis from action theory to moral philosophy, from moral philosophy to practical reasoning, from practical reasoning to doctrinal considerations have in common an underlying teleological structure in terms of what goals are at stake, and how they are ordered. This teleological structure is isomorphic for all the above levels of analysis. This structure and this structure alone is the final arbitrator of legal doctrine, whether it is right or wrong, and whether it is correctly or incorrectly applied to a specific event. ... A structural teleological analysis of the law of tort will reveal that the goals of freedom of action and the prevention of physical harm to persons and property often come into conflict in the context of individual action, and consequently must be ordered against each other. ... Through human experience in the context of human action, we learn what we value and how we order values when they come into conflict such that we must choose one or the other. We then develop moral justificatory practices and practical reasons which we generalize as policies to justify the imposition of our value structure. These in turn are reflected in legal doctrine. There is thus an isomorphic teleological structure in terms of goals and their ordering which underlies legal doctrine. The prima facie duty doctrine, limited by a test of proximity, negates the common sense, empirically-based distinctions between physical injury to persons and property and pure economic loss, and the distinction between causing harm and failing to prevent it from happening. This doctrine runs contrary to the deep teleological structure of ordering of values which comes out of human experience.

The Yale Law Journal recently published a piece entitled The Most-Cited Articles from the Yale Law Journal. Nearly all of the named articles entailed a teleological analysis of law or an area of law in terms of what I have been calling "deep structure." Why is it that the kinds of analysis which lawyers, students, academics and judges find the most interesting seldom appear in legal argument or in the text of legal judgements? The reason is simple. The information costs of proving claims made in terms of policy and practical reasons are too high to be feasible in a court of law.

At the level of analysis of human action we recognize that there are many actions which everyone does most of the time. Because they are performed by a lot of people a lot of the time, limitations on such actions place limitations on our freedom to act. The risks of physical harm to persons or property fall reciprocally on nearly everyone. We are prepared, therefore, to impose no higher standard of care than that of reasonableness. Other actions, however, are done only by particular persons, on particular occasions, or in particular places. The risks of such actions are, therefore, non-reciprocal, and we can limit them to a greater degree without interfering with freedom of action in general. This set of goals and their ordering is isomorphically reflected in moral justificatory theories of fault and causation or strict liability. At the level of doctrine it is reflected in rules such as that of Rylands v. Fletcher. The teleological structure thus runs through all levels of analysis from action theory, moral theory and practical reasoning to doctrinal structure. If one takes most of the analyses of the most cited articles of the Yale Law Journal, one will see that the authors have identified a teleological structure which is isomorphic for the underlying philosophical, economic, practical, and doctrinal levels of the subject matter with which they are dealing."

The Deep Structure of Law and Morality:

http://www.utexas.edu/law/journals/tlr/abstracts/84/84kar.pdf

Utility maximization, morality, and religion

"John Rawls (1958, 1971) proposed two principles for creating a just society: (1) each person should be allowed the maximum amount of liberty that is compatible with everyone else having the maximum amount of liberty and (2) inequalities should be allowed only if it is reasonable to believe that the inequalities will be most beneficial to the least well off. The first of these principles takes precedence over the second, and the first requires that everyone's "liberty" be considered equally.

Joseph Fletcher, who developed situation ethics, said that when I make a choice, it should be the most loving option that I have (Thompson 2003, 51-52). Love requires that I place others on at least an equal basis with myself.

The categorical imperative, as developed by Immanuel Kant (1927a, b), requires two things: (1) that I make only choices that I could change into universal laws for everyone and (2) that I treat other people only as ends, and never as means. Changing choices into universal law would result in giving equal weight to everyone. The second of these rules is logically inconsistent with utility maximization because, according to utility theory, everything I do is a means to increase my own utility.

For example, utility theory would say that my giving money to a homeless person is done to increase my own utility, which implies that I am treating the homeless person as a means, not an end. All of these ethical theories directly imply that, to be moral, I must (as a necessary but not always a sufficient condition) place everyone else on the same level as myself when making choices. In contrast, utility maximization, as taught by economists, places 100 percent of the emphasis on the chooser's utility.

Under utility maximization, I will do things that increase the utility of others if and only if that increases my utility. My utility is primary. Most important, it is theoretically impossible to imagine everyone having utility functions in which everyone else's utility has a weight equal to the chooser's utility because such utility functions would be infinitely recursive. This does not mean that it is impossible to be moral.

Instead, being moral requires that I use rationality to separate my thinking from my own utility function. If I can divorce my thinking from my own utility function and view everyone on an equal basis, then I am more likely to make choices that produce the maximum good for the maximum number of people. Furthermore, then I can make choices that treat people as ends, not means."

http://findarticles.com/p/articles/mi_qa
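
One way to make the "infinitely recursive" claim concrete is a hypothetical linear model. This is our assumption, not the quoted author's. In it, each person's total utility is their private payoff plus `weight` times the total utilities of everyone else. Suppose everyone repeatedly updates on the others' current values. With n people, the update is a linear map with spectral radius `weight * (n - 1)`, so the process settles only when that number is below 1. Under full equal weighting (`weight = 1`) with two or more people, it never settles.

```python
def iterate_utilities(private, weight, rounds):
    """Repeatedly set u_i = private_i + weight * (sum of everyone else's u_j).

    Hypothetical model of interdependent ("other-regarding") utilities.
    """
    u = list(private)
    for _ in range(rounds):
        total = sum(u)
        # Everyone updates simultaneously from the previous round's values.
        u = [x + weight * (total - ui) for x, ui in zip(private, u)]
    return u


def settles(weight, n):
    """Convergence test for n >= 2 people and weight >= 0 (spectral radius weight*(n-1) < 1)."""
    return weight * (n - 1) < 1


print(iterate_utilities([1.0, 2.0, 3.0], weight=0.2, rounds=200))  # close to [2.5, 3.333..., 4.166...]
print(sum(iterate_utilities([1.0, 2.0, 3.0], weight=1.0, rounds=50)))  # explodes
```

With `weight = 0.2`, the three utilities converge to a consistent assignment. With `weight = 1.0`, the total roughly doubles every round, which is the regress the quoted passage describes.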

"The Human Use of Human Beings is a book by Norbert Wiener. It was first published in 1950 and revised in 1954.Wiener was the founding thinker of cybernetics theory and an influential advocate of automation. Human Use argues for the benefits of automation to society. It analyzes the meaning of productive communication and discusses ways for humans and machines to cooperate, with the potential to amplify human power and release people from the repetitive drudgery of manual labor, in favor of more creative pursuits in knowledge work and the arts. He explores how such changes might harm society through dehumanization or subordination of our species, and offers suggestions on how to avoid such risks."

http://en.wikipedia.org/wiki/The_Human_Use_of_Human_Beings

Morality, Maximization, and Economic Behavior:

http://www1.american.edu/a

"On the surface, Bentham's doctrine bears a resemblance to the ancient Greek philosophy of hedonism, which also held that moral duty is fulfilled in the gratification of pleasure-seeking interests. But hedonism prescribes indi vidual actions without reference to the general happiness. Utilitarianism added to hedonism the ethical doctrine that human conduct should be directed toward maximizing the happiness of the greatest number of people. "The greatest happiness for the greatest number'' was the watch phrase of the utilitari-"ans - those who came to share in Bentham's philosophy. Among them were such personalities as Edwin Chadwick and the father-son combination of James and John Stuart Mill. This group champi oned legislation plus social and religious sanctions that punished individuals for harming others in the pursuit of their own happiness. Bentham defined his principle in the following fashion:

By the principle of utility is meant that principle which approves or disapproves of every action whatsoever, according to the tendency which it appears to have to augment or diminish the happiness of the party whose interest is in question... not only of every action of a private individual, but of every measure of government (Principles of Morals and Legislation, p. 17).

What is noteworthy about this declaration is the very minimal distinction Bentham made between morals and legislation. His self-conceived mission was to make the theory of morals and legislation scientific in the Newtonian sense. As Newton's revolutionary physics hinged on the universal principle of attraction (i.e., gravity), Bentham's theory of morals swung on the principle of utility. Newton's roundabout influence on the social sciences was felt in other ways as well. The nineteenth century was one with a passion for measurement. In the social sciences, Bentham rode the crest of this new wave. If pleasure and pain could be measured in some objective sense, then every legislative act could be judged on welfare considerations. This achievement required a conception of the general interest, which Bentham readily undertook to provide."

http://www.economictheorie

Lemuel: Hamid, Maximization as one would see it under many constraints?

Hamid: Yes, under many constraints (polytely). Some are redundant (isotely); however, in some way all are mutually dependent in a heterarchical/hierarchical way.

Lemuel: That makes me wonder what constraints utilitarians put into their equations to maximize utility. As always, when more constraints than usual are included, people react.

Hamid: Indeed they do, predictably in many cases, which is why it's important to design the constraints in ways which to some degree anticipate and minimize deviations from local-global complementarity.

Anticipatory Topoi:

Objective:

Develop a geometric/logical approach to some concepts related to anticipatory systems (viewed as transition systems)

Logical/geometric characterisation of an anticipatory operator on systems.

http://www-di.inf.puc-rio.br/~hermann/CASYS2001/ppframe.htm

"True, there is a fundamental difference between our ability to explore human behavior, or study bacteria or electrons. Bacteria don't get annoyed at you when you put them under a microscope. The moon will not sue you for landing a spacecraft on its face. Electrons are not subject to privacy laws. Yet, none of us want to submit to the invasive inquiry to which we subject our bacteria, our planets, or our electrons--aiming to know everything about us, all the time. In Bursts: The Hidden Pattern Behind Everything We Do, I try to convince you that this is about to change, with profound consequences.

Indeed, today just about everything we do leaves digital breadcrumbs in some database. Our e-mails are preserved in the log files of our e-mail provider; when, where, and what we shop for, our taste and our ability to pay for it is catalogued by our credit card provider; our face and fashion is remembered by countless surveillance cameras installed everywhere, from shopping malls to street corners. While we often choose not to think about it, the truth is that we are under multiple microscopes and our life, with minute resolution, can be pieced together from these mushrooming databases. And the measurements my research group and other scientists have performed on some of these datasets show something rather unexpected: they not only track our past, but they reveal our future as well. Indeed, by studying the communication and movement of millions of individuals through the electronic records they left behind, like mobile phone records, we have found a huge degree of predictability of individual behavior. The measurements told us that to those familiar with our past, our future acts should rarely be a surprise.

As we follow our impulses and daily priorities, we rarely realize that we submit ourselves to mathematically precise laws that describe our activities. The patterns are by no means new--they drove human behavior for centuries, dominating everything from wars to Einstein's correspondence. Our ability to collect these patterns is new, however, allowing us to extract the laws that govern some of our most intimate moments. And as we did that, we learned that everything we do, we do in bursts--brief periods of intensive activity followed by long periods of nothingness. These bursts are so essential to human nature that trying to avoid them is not only foolish, but futile as well."

http://www.huffingtonpost.com/albertlaszlo-barabasi/bursts-can-human-behavior_b_556030.html
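
The bursty pattern described above is often illustrated with the priority-queue model that Barabási proposed in 2005. In that model, an agent keeps a short list of tasks with random priorities, usually executes the highest-priority one, and occasionally picks one at random. The sketch below is our simplified reconstruction of that idea, not code from the book or the article. The parameter names `queue_len` and `p` and the seeds are assumptions, and waiting time is counted in simulation steps.

```python
import random


def priority_queue_waits(steps, p, queue_len=2, seed=0):
    """Simulate a priority-queue model of task execution and return waiting times.

    Each task is [priority, arrival_step]. With probability p the highest-priority
    task is executed; otherwise a task is picked uniformly at random. The executed
    task is replaced by a fresh task with a uniform random priority.
    """
    rng = random.Random(seed)
    tasks = [[rng.random(), 0] for _ in range(queue_len)]
    waits = []
    for step in range(1, steps + 1):
        if rng.random() < p:
            chosen = max(range(queue_len), key=lambda k: tasks[k][0])
        else:
            chosen = rng.randrange(queue_len)
        waits.append(step - tasks[chosen][1])  # steps this task spent in the list
        tasks[chosen] = [rng.random(), step]
    return waits


random_waits = priority_queue_waits(20000, p=0.0, seed=1)
bursty_waits = priority_queue_waits(20000, p=0.9999, seed=1)
print(max(random_waits), max(bursty_waits))  # the second is typically far larger
```

When `p` is close to 1, most tasks are done almost immediately, but a few low-priority tasks wait a very long time. This produces heavy-tailed, bursty waiting times. With `p = 0`, each task is picked by chance, so waits stay short and roughly geometric.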

"Overcoming Bias began in November ‘06 as a group blog on the general theme of how to move our beliefs closer to reality, in the face of our natural biases such as overconfidence and wishful thinking, and our bias to believe we have corrected for such biases, when we have done no such thing."

http://www.overcomingbias.com/about

Semantic Enhancement of Legal Information… Are We Up for the Challenge?

"SELF-GENERATING SYSTEMS

In his recent book Self-Modifying Systems in Biology and Cognitive Science (1991), George Kampis has outlined a new approach to the dynamics of complex systems. The key idea is that the Church-Turing thesis applies only to simple systems. Complex biological and psychological systems, Kampis proposes, must be modeled as nonprogrammable, self-referential systems called "component-systems."

In this chapter I will approach Kampis's component-systems with an appreciative but critical eye. And this critique will be followed by the construction of an alternative model of self-referential dynamics which I call "self-generating systems" theory. Self-generating systems were devised independently of component-systems, and the two classes of systems have their differences. But on a philosophical level, both formal notions are getting at the same essential idea. Both concepts are aimed at describing systems that, in some sense, construct themselves. As I will show in later chapters, this is an idea of the utmost importance to the study of complex psychological dynamics."

"The principle [of stationary action] remains central in modern physics and mathematics, being applied in the theory of relativity, quantum mechanics and quantum field theory, and a focus of modern mathematical investigation in Morse theory. This article deals primarily with the historical development of the idea; a treatment of the mathematical description and derivation can be found in the article on the action. The chief examples of the principle of stationary action are Maupertuis' principle and Hamilton's principle."
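
For reference, here is Hamilton's principle, one of the two examples named above, in its standard textbook form (not taken from the quoted article). A path q(t) between fixed endpoints makes the action stationary exactly when it satisfies the Euler-Lagrange equation:

$$
S[q] = \int_{t_1}^{t_2} L(q, \dot q, t)\, dt, \qquad \delta S = 0
\;\Longrightarrow\;
\frac{d}{dt}\frac{\partial L}{\partial \dot q} - \frac{\partial L}{\partial q} = 0 .
$$

For a particle in a potential, L = ½ m q̇² - V(q). Then ∂L/∂q̇ = m q̇ and ∂L/∂q = -V'(q), and the equation reduces to Newton's law m q̈ = -V'(q).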

"However, since one thing can have utility in more than one frame, intersecting content provides a basis for entanglement of utility functions. For example, if there are two hungry people A and B on a desert island and nothing to eat but one mango hanging from a tree, their individual utility functions both acquire the mango as an argument. Indeed, where teamwork has utility (and this is the rule in human affairs), A and B are acquired by each other's utility function (e.g., suppose that the only way A or B can reach the mango is to support or be supported by the other from below).

In a system dominated by competition and cooperation - a system like the real world - this cross-acquisition is a condition of interaction. But given a system with interacting elements, we have a systemic identity, i.e. a distributive self-transformation applying symmetrically to every element (frame) in the system, and this implies the existence of a mutual transformation relating different elements and ultimately rendering them commensurate after all. So "absolute moral relativism" fails in interactive real-world contexts. It's a logical absurdity."

"We first present the concept of duality appearing in order theory, i.e. the notions of dual isomorphism and of Galois connection. Then we describe two fundamental dualities, the duality extension/intention associated with a binary relation between two sets, and the duality between implicational systems and closure systems. Finally we present two «concrete» dualities occurring in social choice and in choice functions theories." Some order dualities in logic, games and choices

"Game Theory can be roughly divided into two broad areas: non-cooperative (or strategic) games and co-operative (or coalitional) games. The meaning of these terms are self evident, although John Nash claimed that one should be able to reduce all co-operative games into some non-cooperative form. This position is what is known as the "Nash Programme". Within the non-cooperative literature, a distinction can also be made between "normal" form games (static) and "extensive" form games (dynamic)."[94]

"It is argued that virtually all coalitional, strategic and extensive game formats as currently employed in the extant game-theoretic literature may be presented in a natural way as discrete nonfull or even-under a suitable choice of morphisms- as full subcategories of Chu (Poset 2)."On Game Formats and Chu Spaces
