"Ontology describes the types of things that exist, and rules that govern them; a data model defines records about things, and is the basis for a database design. Not all the information in ontology may be needed (or can even be held) in a data model and there are a number of choices that need to be made. For example, some of the ontology may be held as reference data instead of as entity types [6].

Ontologies are promised a bright future. In this paper we propose that, as ontologies are closely related to modern object-oriented software engineering, it is natural to adapt existing object-oriented software development methodologies for the task of ontology development. Because of the similarity between descriptive ontologies and database schemas, object-oriented conceptual data models are good candidates for ontology modeling; however, there are differences between the constructs in object models and those in current ontology proposals, namely in object structure, object identity, generalization hierarchy, defined constructs, views, and derivations. We can view ontology design as an extension of logical database design, which means that training object data software developers could be a promising approach. An ontology is comparable to a database schema, but it represents a much richer information model than a normal database schema, and also a richer information model than a UML class/object model. Object modeling's focus on identity and behavior is completely different from the relational model’s focus on information.

It is possible to adapt existing object-oriented software development methodologies for ontology development. The object model of a system consists of objects, identified from the text description, and structural linkages between them corresponding to existing or established relationships. Ontologies provide metadata schemas, offering a controlled vocabulary of concepts. At the center of both object models and ontologies are objects within a given problem domain. The difference is that while the object model should contain explicitly shown structural dependencies between objects in a system, including their properties, relationships, events and processes, ontologies are based on related terms only. On the other hand, the object model refers to the collections of concepts used to describe the generic characteristics of objects in object-oriented languages. Because an ontology is accepted as a formal, explicit specification of a shared conceptualization, ontologies can be naturally linked with object models, which represent a system-oriented map of related objects.

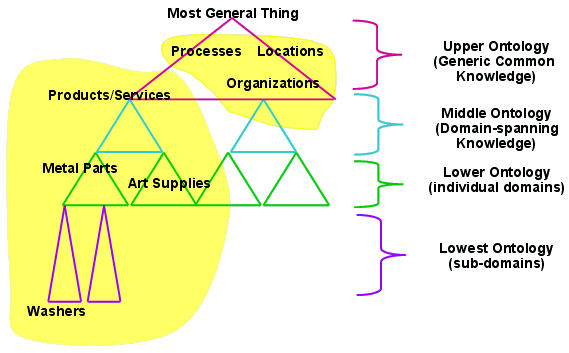

An ontology structure holds definitions of concepts, binary relationships between concepts, and attributes. Relationships may be symmetric or transitive and may have an inverse. Minimum and maximum cardinality constraints for relations and attributes may be specified. Concepts and relationships can be arranged in two distinct generalization hierarchies [14]. Concepts, relationship types, and attributes abstract from concrete objects or values and thus describe the schema (the ontology); on the other hand, concrete objects populate the concepts, concrete values instantiate the attributes of these objects, and concrete relationships instantiate relationships. Three types of relationship may be used between classes: generalization, association, and aggregation."

http://www.sersc.org/journals/IJSEIA/vol3_no1_2009/5.pdf
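The ontology structure described in the excerpt above (concepts in a generalization hierarchy, binary relationships that may be symmetric, transitive, or have an inverse, and cardinality limits) can be sketched roughly as follows. This is only an illustration under stated assumptions: the class names `Relation` and `Ontology`, the method names, and the vehicle example are all hypothetical and do not come from the cited paper.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class Relation:
    """A binary relationship type (hypothetical structure for illustration)."""
    name: str
    symmetric: bool = False
    transitive: bool = False
    inverse: Optional[str] = None   # name of the inverse relation, if any
    max_card: Optional[int] = None  # None means no upper bound


@dataclass
class Ontology:
    parents: Dict[str, Optional[str]] = field(default_factory=dict)
    relations: Dict[str, Relation] = field(default_factory=dict)
    facts: Set[Tuple[str, str, str]] = field(default_factory=set)

    def add_concept(self, name, parent=None):
        self.parents[name] = parent

    def is_a(self, concept, ancestor):
        # Walk up the generalization hierarchy.
        while concept is not None:
            if concept == ancestor:
                return True
            concept = self.parents.get(concept)
        return False

    def add_relation(self, rel):
        self.relations[rel.name] = rel

    def assert_fact(self, subj, rel_name, obj):
        rel = self.relations[rel_name]
        current = {o for (s, r, o) in self.facts if s == subj and r == rel_name}
        # Cardinality is checked only for the asserted direction.
        if rel.max_card is not None and obj not in current and len(current) >= rel.max_card:
            raise ValueError(f"{subj} exceeds max cardinality for {rel_name}")
        self.facts.add((subj, rel_name, obj))
        if rel.symmetric:
            self.facts.add((obj, rel_name, subj))
        if rel.inverse is not None:
            self.facts.add((obj, rel.inverse, subj))

    def related(self, subj, rel_name):
        # Direct objects, plus the transitive closure if the relation is transitive.
        rel = self.relations[rel_name]
        seen = set()
        frontier = [subj]
        while frontier:
            node = frontier.pop()
            for (s, r, o) in self.facts:
                if s == node and r == rel_name and o not in seen:
                    seen.add(o)
                    if rel.transitive:
                        frontier.append(o)
        return seen


onto = Ontology()
onto.add_concept("Vehicle")
onto.add_concept("Car", parent="Vehicle")
onto.add_relation(Relation("part_of", transitive=True, inverse="has_part"))
onto.add_relation(Relation("has_part"))
onto.assert_fact("piston", "part_of", "engine")
onto.assert_fact("engine", "part_of", "car1")
```

Here the schema level (concepts and relation types) is kept separate from the instance level (the asserted facts), which mirrors the distinction the excerpt draws between the ontology and the concrete objects that populate it.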

"We present a UML Profile for the description of service oriented applications.

The profile focuses on style-based design and reconfiguration aspects

at the architectural level. Moreover, it has a formal support in terms of an approach

called Architectural Design Rewriting, which enables formal analysis of

the UML specifications. We show how our prototypical implementation can be

used to analyse and verify properties of a service oriented application."

http://www.albertolluch.com/papers/adr.uml4soa.pdf

"Semantic Web Services, like conventional web services, are the server end of a client–server system for machine-to-machine interaction via the World Wide Web. Semantic services are a component of the semantic web because they use markup which makes data machine-readable in a detailed and sophisticated way (as compared with human-readable HTML which is usually not easily "understood" by computer programs).

The mainstream XML standards for interoperation of web services specify only syntactic interoperability, not the semantic meaning of messages. For example, Web Services Description Language (WSDL) can specify the operations available through a web service and the structure of data sent and received but cannot specify semantic meaning of the data or semantic constraints on the data. This requires programmers to reach specific agreements on the interaction of web services and makes automatic web service composition difficult.

...

Choreography is concerned with describing the external visible behavior of services, as a set of message exchanges optionally following a Message Exchange Pattern (MEP), from the functionality consumer point of view.

Orchestration deals with describing how a number of services, two or more, cooperate and communicate with the aim of achieving a common goal."

http://en.wikipedia.org/wiki/Semantic_Web_Services

An Analysis of Service Ontologies:

http://aisel.aisnet.org/cgi/viewcontent.cgi?article=1028&context=pajais

Information and Service Design Lecture Series

The Information and Service Design (ISD) Lecture Series brings together practitioners and researchers from various disciplines to report on their activities in the fields of information modeling, information delivery, service design, and the challenges of integrating these activities. The ISD Lecture Series started as Service Science, Management, and Engineering (SSME) Lecture Series in the fall 2006 and spring 2007 semesters, and the rebranded fall 2007 lecture series will continue to survey the service landscape, and explore issues of law, privacy, semantics, business models, and education.

http://dret.net/lectures/isd-fall07/

Fuzzy Logic as Interfacing Media for Constraint Propagation Based on Theories of Chu Space and Information Flow:

http://tinyurl.com/newdirectionsinroughsetsdatami

Chu Spaces: Towards New Foundations for Fuzzy Logic and Fuzzy Control, with Applications to Information Flow on the World Wide Web:

http://www.cs.utep.edu/vladik/1999/tr99-12.pdf

Chu Spaces - A New Approach to Diagnostic Information Fusion:

http://digitalcommons.utep.edu/cgi/viewcontent.cgi?article=1535&context=cs_techrep&sei-redir=1#search="chu+space+information+flow+games"

"Preference is a key area where analytic philosophy meets philosophical logic. I start with two related issues: reasons for preference, and changes in preference, first mentioned in von Wright’s book The Logic of Preference but not thoroughly explored there. I show how these two issues can be handled together in one dynamic logical framework, working with structured two-level models, and I investigate the resulting dynamics of reason-based preference in some detail. Next, I study the foundational issue of entanglement between preference and beliefs, and relate the resulting richer logics to belief revision theory and decision theory."

http://www.springerlink.com/content/aw4w76p772007g47/

COGNITION AS INTERACTION

"Many cognitive activities are irreducibly social, involving interaction between several different agents. We look at some examples of this in linguistic communication and games, and show how logical methods provide exact models for the relevant information flow and world change. Finally, we discuss possible connections in this arena between logico-computational approaches and experimental cognitive science."

http://www.illc.uva.nl/Publications/ResearchReports/PP-2005-10.text.pdf

http://staff.science.uva.nl/~johan/research.html

"Recent psychological research suggests that the individual human mind may

be effectively modeled as involving a group of interacting social actors: both various subselves representing coherent aspects of personality; and virtual actors embodying “internalizations of others.” Recent neuroscience research suggests the further hypothesis that these internal actors may in many cases be neurologically associated with collections of mirror neurons. Taking up this theme, we study the mathematical and conceptual structure of sets of inter-observing actors, noting that this structure is mathematically isomorphic to the structure of physical entities called “mirrorhouses.”

Mirrorhouses are naturally modeled in terms of abstract algebras such as quaternions and octonions (which also play a central role in physics), which leads to the conclusion that the presence within a single human mind of multiple inter-observing actors naturally gives rise to a mirrorhouse-type cognitive structure and hence to a quaternionic and octonionic algebraic structure as a significant aspect of human intelligence. Similar conclusions would apply to nonhuman intelligences such as AI’s, we suggest, so long as these intelligences included empathic social modeling (and/or other cognitive dynamics leading to the creation of simultaneously active subselves or other internal autonomous actors) as a significant component."

http://www.goertzel.org/dynapsyc/2007/mirrorself.pdf

Ontology Engineering, Universal Algebra, and Category Theory:

...

"We merely take the set-theoretic intersection of the classes of the ontologies, and the set-theoretic intersection of the relations between those classes. Of course, the foregoing is too naive. Two different ontologies may have two common classes X and Y, and in each ontology there may be a functional relation X → Y, but the two relations may be semantically different. Perhaps we could require that the relations carry the same name when we seek their intersection, but this brings us back to the very problem ontologies are intended to control: people use names inconsistently.

Instead, we take seriously the diagram A ← C → B, developed with insightful intervention to encode the common parts of A and B and record that encoding via the two maps. Thus an f : X → Y will only appear in C when there are corresponding semantically equivalent functions in A and B, whatever they might be called. The requirement to check for semantic equivalence is unfortunate but unavoidable."

http://www.mta.ca/~rrosebru/articles/oeua.pdf
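One way to picture the diagram A ← C → B from the excerpt above is as a small common schema C together with two explicit maps, one into each ontology. The sketch below is a loose illustration and not the paper's construction: the `Schema` and `Mapping` types, the function `is_homomorphism`, and the example names are all hypothetical. The code checks only that each map respects sources and targets; whether the mapped relations are semantically equivalent is left to a human, as the excerpt says it must be.

```python
from dataclasses import dataclass
from typing import Dict, Set, Tuple

Edge = Tuple[str, str]  # (source class, target class)


@dataclass
class Schema:
    classes: Set[str]
    relations: Dict[str, Edge]  # relation name -> (source, target)


@dataclass
class Mapping:
    on_classes: Dict[str, str]
    on_relations: Dict[str, str]


def is_homomorphism(src: Schema, dst: Schema, m: Mapping) -> bool:
    """Check that m sends classes to classes and relations to relations
    while preserving each relation's source and target."""
    if any(m.on_classes.get(c) not in dst.classes for c in src.classes):
        return False
    for name, (s, t) in src.relations.items():
        image = m.on_relations.get(name)
        if image not in dst.relations:
            return False
        if dst.relations[image] != (m.on_classes[s], m.on_classes[t]):
            return False
    return True


# Two ontologies that name the same idea differently (illustrative data).
A = Schema({"Person", "Dept"}, {"works_in": ("Person", "Dept"),
                                "heads": ("Person", "Dept")})
B = Schema({"Staff", "Unit"}, {"assigned_to": ("Staff", "Unit")})

# The common part C, with one relation f : X -> Y.
C = Schema({"X", "Y"}, {"f": ("X", "Y")})
to_A = Mapping({"X": "Person", "Y": "Dept"}, {"f": "works_in"})
to_B = Mapping({"X": "Staff", "Y": "Unit"}, {"f": "assigned_to"})

# A structurally invalid map: f is sent to a relation that A does not have.
bad = Mapping({"X": "Person", "Y": "Dept"}, {"f": "assigned_to"})

span_ok = is_homomorphism(C, A, to_A) and is_homomorphism(C, B, to_B)
```

Note that `works_in` and `assigned_to` share no name, so a name-based intersection would miss them; the two maps record the correspondence explicitly instead.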

"Foundational issues

Basic ontological categories and relations

Formal comparison and evaluation of ontologies

Ontology, language, cognition

Ontology and natural-language semantics

Formal semantics of discourse and dialogue relations

Ontology and lexical resources

Ontology, visual perception and multimedia

Ontology-driven information systems

Methodologies for ontology development

Ontology-driven conceptual modeling

Ontology-driven information integration & retrieval

Application domains

Organizations, social reality, law

Business services and e-government

Industrial artifacts, manufacturing, engineering design

Formal models of interaction and cooperation"

http://www.loa-cnr.it/Research.html

"The Economics Group in the Electrical Engineering and Computer Science Department at Northwestern University studies the interplay between the algorithmic, economic, and social aspects of the Internet and related systems, and develops ways to facilitate users' interactions in these systems. This work draws upon a wide variety of techniques from theoretical and experimental computer science to traditional economic frameworks. By applying these techniques to economic and social systems in place today, we can shed light on interesting phenomena and, ideally, provide guidance for future developments of these systems. This interdisciplinary effort is undertaken jointly with the Managerial Economics and Decision Sciences Department in the Kellogg School of Management, The Center for Mathematical Studies in Economics and Management Science, and other institutions at Northwestern University and the greater Chicago area.

Specific topics under active research include: algorithmic mechanism design, applied mechanism design, bounded rationality, market equilibria, network protocol design, prediction markets, security, social networks, and wireless networking. They are described in more detail below.

--------------------------------------------------------------------------------

Algorithmic Mechanism Design. Mechanism design (in economics) studies how to design economic systems (a.k.a., mechanisms) that have good properties. Algorithm design (in computer science) studies how to design algorithms with good computational properties. Algorithmic mechanism design combines economic and algorithmic analyses, in gauging the performance of a mechanism; with optimization techniques for finding the mechanism that optimizes (or approximates) the desired objective. (Contact: Hartline, Immorlica)

Applied Mechanism Design. Internet services are fundamentally economic systems and the proper design of Internet services requires resolving the advice of mechanism design theory, which often formally only applies in ideological models, with practical needs and real markets and other settings. The interplay between theory and practice is fundamental for making the theoretical work relevant and developing guidelines for designing Internet systems, including auctions like eBay and Google's AdWords auction, Internet routing protocols, reputation systems, file sharing protocols, etc. For example, the problem of auctioning advertisements on Internet search engines such as Yahoo!, Google, and Live Search exemplifies many of the theoretical challenges in mechanism design as well as being directly relevant to a multi-billion dollar industry. Close collaboration with industry in this area is a focus. (Contact: Hartline, Immorlica)

Bounded Rationality. Traditional economic models assume that all parties understand the full consequences of their actions given the information they have, but often computational constraints limit their ability to make fully rational decisions. Properly modeling bounded rationality can help us better understand equilibrium in games, forecast testing, preference revelation, voting and new ways to model difficult concepts like unforeseen consequences in contracts and brand awareness. (Contact: Fortnow, Vohra)

Market Equilibria. The existence of equilibria has been the most important property for a "working" market or economy, and the most studied one in Economics. Existence of a desirable equilibrium is not enough; additionally, the market must converge naturally and quickly to this equilibrium. Of special interest here are decentralized algorithms, ones that do not rely on a central agent, that demonstrate how individual actions in a market may actually work in harmony to reach an equilibrium. (Contact: Zhou)

Network Protocol Design. Much of the literature on designing protocols assumes that all or most participants are honest and blindly follow instructions, whereas the rest may be adversarial. This is particularly apparent in distributed computing, where the aim is to achieve resilience against faults, and in cryptography, where the aim is to achieve security against a malicious adversary. A crucial question is whether such resilient protocols can also be made faithful -- that is, whether the (often unrealistic) assumption of honest participants can be weakened to an assumption of self-interested ones. Another question is whether one may be able to side-step impossibility results on the resilience of protocols by considering adversarial coalitions who do not act maliciously but rather in accordance with their own self-interests. (Contact: Gradwohl)

Prediction Markets. Prediction markets are securities based usually on a one-time binary event, such as whether Hillary Clinton will win the 2008 election. Market prices have been shown to give a more accurate prediction of the probability of an event happening than polls and experts. Several corporations also use prediction markets to predict sales and release dates of products. Our research considers theoretical models of these markets to understand why they seem to aggregate information so well, "market scoring rules" that allow prediction markets even with limited liquidity, the effect of market manipulation and ways to design markets and their presentation to maximize the usefulness of their predictive value. (Contact: Fortnow)

Security. With the increasing popularity of the Internet and the penetration of cyber-social networks, the security of computation and communication systems has become a critical issue. The traditional approach to system security assumes unreasonably powerful attackers and often ends up with intractability. An economic approach to system security models the attacker as a rational agent who can be thwarted if the cost of attack is high. (Contact: Zhou)

Social Networks. A social network is a collection of entities (e.g., people, web pages, cities), together with some meaningful links between them. The hyperlink structure of the world-wide-web, the graph of email communications at a company, the co-authorship graph of a scientific community, and the friendship graph on an instant messenger service are all examples of social networks. In recent years, explicitly represented social networks have become increasingly common online, and increasingly valuable to the Internet economy. As a result, researchers have unprecedented opportunities to develop testable theories of social networks pertaining to their formation and the adoption and diffusion of ideas/technologies within these networks. (Contact: Immorlica)

Wireless Networking. Recent advances in reconfigurable radios can potentially enable wireless applications to locate and exploit underutilized radio frequencies (a.k.a., spectrum) on the fly. This has motivated the consideration of dynamic policies for spectrum allocation, management, and sharing; a research initiative that spans the areas of wireless communications, networking, game theory, and mechanism design. (Contact: Berry, Honig)"

http://econ.eecs.northwestern.edu/
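The Prediction Markets item above mentions "market scoring rules" that keep a market usable even with limited liquidity. One widely known example is the logarithmic market scoring rule (LMSR), in which an automated market maker always quotes a price derived from a cost function. The sketch below illustrates LMSR in general and is not taken from the group's own work; the function names and the liquidity parameter value `b=100.0` are assumptions chosen for illustration.

```python
import math


def lmsr_cost(quantities, b=100.0):
    """LMSR cost function C(q) = b * log(sum_i exp(q_i / b)).
    The maximum is subtracted inside the exponent for numerical stability."""
    m = max(q / b for q in quantities)
    return b * (m + math.log(sum(math.exp(q / b - m) for q in quantities)))


def lmsr_prices(quantities, b=100.0):
    """Instantaneous prices p_i = exp(q_i / b) / sum_j exp(q_j / b).
    They are positive and sum to 1, so they can be read as probabilities."""
    m = max(q / b for q in quantities)
    weights = [math.exp(q / b - m) for q in quantities]
    total = sum(weights)
    return [w / total for w in weights]


def trade_cost(quantities, outcome, shares, b=100.0):
    """Amount a trader pays to buy `shares` of `outcome`:
    the difference in the cost function before and after the trade."""
    after = list(quantities)
    after[outcome] += shares
    return lmsr_cost(after, b) - lmsr_cost(quantities, b)


# A binary event with no shares sold yet: both outcomes start at equal prices.
start = [0.0, 0.0]
prices_before = lmsr_prices(start)
cost = trade_cost(start, outcome=0, shares=10.0)
prices_after = lmsr_prices([10.0, 0.0])
```

Because the market maker always stands ready to trade at the quoted price, a single trader can move the price without waiting for a counterparty. A larger `b` makes prices move more slowly per share bought and raises the market maker's worst-case loss, which for LMSR is bounded by `b * log(n)` for `n` outcomes.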

"Like economics, operations research, and industrial engineering, computer science deals with both the modeling of complex real world systems and the creation of new tools and methods that improve the systems' performance. However, in the former three, the notion of social costs or economic costs is more prominent in the models, and an improved system is more productive from a socioeconomic cost standpoint. In computer science, cost is usually associated with the "difficulty" or computational complexity, and the space (storage) or time (processing) complexity of an algorithm or a system. Mechanism design is one area of research that involves computer scientists where there are clear efforts to bridge the two notions of costs."

http://tinyurl.com/servicessciencefundamentalscha

Mechanism Design for "Free" but "No Free Disposal" Services: The Economics of Personalization Under Privacy Concerns

http://mansci.journal.informs.org/cgi/content/abstract/56/10/1766

Service Science

http://faculty.ucmerced.edu/pmaglio/2007/Lecture1.pdf

DECISION TECHNOLOGY, MOBILE TECHNOLOGIES, AND SERVICE SCIENCE

Advanced Analytics Services for Managerial Decision Support

Fuzzy Logic And Soft Computing In Service And Management Support

Information Systems & Decision Technologies for Sustainable Development

Intelligent Decision Support for Logistics and Supply Chain Management

Mobile Value Services / Mobile Business / Mobile Cloud

NETWORK DSS: Mobile Social and Sensor Networks for Man-

Multicriteria Decision Support Systems

Service Science, Systems and Cloud Computing Services

Service Science, Management and Engineering (SSME)

Visual Analysis of Massive Data for Decision Support and Operational Management

http://www.hicss.hawaii.edu/hicss_45/45dt.htm

"Decision Engineering is a framework that unifies a number of best practices for organizational decision making. It is based on the recognition that, in many organizations, decision making could be improved if a more structured approach were used. Decision engineering seeks to overcome a decision making "complexity ceiling", which is characterized by a mismatch between the sophistication of organizational decision making practices and the complexity of situations in which those decisions must be made. As such, it seeks to solve some of the issues identified around complexity theory and organizations. In this sense, Decision Engineering represents a practical application of the field of complex systems, which helps organizations to navigate the complex systems in which they find themselves.

Despite the availability of advanced process, technical, and organizational decision making tools, decision engineering proponents believe that many organizations continue to make poor decisions. In response, decision engineering seeks to unify a number of decision making best practices, creating a shared discipline and language for decision making that crosses multiple industries, both public and private organizations, and that is used worldwide.[1]

To accomplish this ambitious goal, decision engineering applies an engineering approach, building on the insight that it is possible to design the decision itself using many principles previously used for designing more tangible objects like bridges and buildings. This insight was previously applied to the engineering of software—another kind of intangible engineered artifact—with significant benefits.[2]

As in previous engineering disciplines, the use of a visual design language representing decisions is emerging as an important element of decision engineering, since it provides an intuitive common language readily understood by all decision participants. Furthermore, a visual metaphor[3] improves the ability to reason about complex systems[4] as well as to enhance collaboration.

In addition to visual decision design, there are two other aspects of engineering disciplines that aid mass adoption. These are: 1) the creation of a shared language of design elements and 2) the use of a common methodology or process, as illustrated in the diagram above."

http://en.wikipedia.org/wiki/Decision_engineering

"Granular computing is gradually changing from a label to a new field of study. The driving forces, the major schools of thought, and the future research directions on granular computing are examined. A triarchic theory of granular computing is outlined. Granular computing is viewed as an interdisciplinary study of human-inspired computing, characterized by structured thinking, structured problem solving,

and structured information processing.

...

Zhang and Zhang [44] propose a quotient space theory of problem solving. The basic idea is to granulate a problem space by considering relationships between states. Similar states are grouped together at a higher level. This produces a hierarchical description and representation of a problem. Like other theories of hierarchical problem solving, quotient space theory searches a solution using multiple levels, from abstract-level spaces to the ground-level space. Again, invariant properties are considered, including truth preservation downwards in the hierarchy and falsity preservation upwards in the hierarchy. The truth-preservation property is similar to the monotonicity property.

These hierarchical problem solving methods may be viewed as structured problem solving. Structured programming, characterized by top-down design and step-wise refinement based on multiple levels of details, is another example. The principles employed may serve as a methodological basis of granular computing."

http://www2.cs.uregina.ca/~yyao/PAPERS/ieee_grc_08.pdf
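The idea of granulating a problem space by grouping similar states can be illustrated with a small graph-search sketch. The framing as directed graphs, the function names, and the example states are all assumptions made here for illustration, not Zhang and Zhang's formalism. The sketch relies on one simple property: every step in the fine-grained space either stays inside a block or follows an edge of the coarse space, so if the goal block is unreachable at the coarse level, the goal state is unreachable at the fine level and the detailed search can be skipped.

```python
from collections import deque


def adjacency(edges):
    """Build an adjacency map from a list of directed (u, v) edges."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set())
    return graph


def quotient_graph(edges, block_of):
    """Collapse each state to its block; keep edges that cross blocks."""
    coarse = {}
    for u, v in edges:
        bu, bv = block_of[u], block_of[v]
        coarse.setdefault(bu, set())
        coarse.setdefault(bv, set())
        if bu != bv:
            coarse[bu].add(bv)
    return coarse


def reachable(graph, start, goal):
    """Breadth-first search for a directed path from start to goal."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


# Fine-grained state space and a grouping of similar states into blocks.
edges = [("s0", "s1"), ("s1", "s2"), ("s3", "s4")]
block_of = {"s0": "A", "s1": "A", "s2": "B", "s3": "C", "s4": "C"}

fine = adjacency(edges)
coarse = quotient_graph(edges, block_of)


def solve(start, goal):
    """Search the coarse level first; descend only if it looks feasible."""
    if not reachable(coarse, block_of[start], block_of[goal]):
        return False  # ruled out at the abstract level
    return reachable(fine, start, goal)
```

With this data, `solve("s0", "s4")` is rejected at the coarse level because block C cannot be reached from block A, while `solve("s0", "s2")` passes the coarse check and is then confirmed in the fine-grained space.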

“Granular computing, in our view, attempts to extract the commonalities from existing fields to establish a set of generally applicable principles, to synthesize their results into an integrated whole, and to connect fragmentary studies in a unified framework. Granular computing at philosophical level concerns structured thinking, and at the application level concerns structured problem solving. While structured thinking provides guidelines and leads naturally to structured problem solving, structured problem solving implements the philosophy of structured thinking.” (Yao, 2005, Perspectives of Granular Computing [25])

“We propose that Granular Computing is defined as a structured combination of algorithmic abstraction of data and non-algorithmic, empirical verification of the semantics of these abstractions. This definition is general in that it does not specify the mechanism of algorithmic abstraction nor it elaborates on the techniques of experimental verification. Instead, it highlights the essence of combining computational and non-computational information processing.” (Bargiela and Pedrycz, 2006, The roots of Granular Computing [2])

http://www2.cs.uregina.ca/~yyao/PAPERS/three_perspective.pdf

"What makes fabric computing different from grid computing is that there is a level of entropy where the state of the fabric system is dynamic. The rules that govern a CAS are simple. Elements can self-organize, yes are able to generate a large variety of outcomes and will grow organically from emergence. The fabric allows for the consumer to generate or evolve a large number of solutions from the basic building blocks provided by the fabric. If the elements of the fabric are disrupted, the fabric should maintain the continuity of service. This provides resiliency. In addition, if the tensile strength of the fabric is stressed by a particular work unit within a particular area of the fabric, the fabric must be able scale to distribute the load and adapt to provide elasticity and flexibility."

http://blogs.msdn.com/b/zen/archive/2011/02/28/architecture-considerations-around-fabric-computing.aspx

"Distributed shared-memory architectures

Each cell of a Computing Fabric must present the image of a single system, even though it can consist of many nodes, because this greatly eases programming and system management. SSI (Single System Image) is the reason that symmetric multiprocessors have become so popular among the many parallel processing architectures.

However, all processors in an SMP (symmetric multiprocessing) system, whether a two-way Pentium Pro desktop or a 64-processor Sun Ultra Enterprise 10000 server, share and have uniform access to centralized system memory and secondary storage, with costs rising rapidly as additional processors are added, each requiring symmetric access.

The largest SMPs have no more than 64 processors because of this, although that number could double within the next two years. By dropping the requirement of uniform access, CC-NUMA (Cache Coherent--Non-Uniform Memory Access) systems, such as the SGI/Cray Origin 2000, can distribute memory throughout a system rather than centralize memory as SMPs do, and they can still provide each processor with access to all the memory in the system, although now nonuniformly.

CC-NUMA is a type of distributed shared memory. Nonuniform access means that, theoretically, memory local to a processor can be addressed far faster than the memory of a remote processor. Reality, however, is not so clear-cut. An SGI Origin's worst-case latency of 800 nanoseconds is significantly better (shorter) than the several-thousand-nanosecond latency of most SMP systems. The net result is greater scalability for CC-NUMA, with current implementations, such as the Origin, reaching 128 processors. That number is expected to climb to 1,024 processors within two years. And CC-NUMA retains the single system image and single address space of an SMP system, easing programming and management.

Although clusters are beginning to offer a single point for system management, they don't support a true single system image and a single address space, as do CC-NUMA and SMP designs. Bottom line: CC-NUMA, as a distributed shared-memory architecture, enables an easily programmed single system image across multiple processors and is compatible with a modularly scalable interconnect, making it an ideal architecture for Computing Fabrics."

http://www.infomaniacs.com/Pubs/PCWeek_CompFabrics_Technologies.htm

"The main advantages of fabrics are that a massive concurrent processing combined with a huge, tightly-coupled address space makes it possible to solve huge computing problems (such as those presented by delivery of cloud computing services) and that they are both scalable and able to be dynamically reconfigured.[2]

Challenges include a non-linearly degrading performance curve, whereby adding resources does not linearly increase performance which is a common problem with parallel computing and maintaining security."

http://en.wikipedia.org/wiki/Fabric_computing

Service Science

Content Management System for Collaborative Knowledge Sharing

http://autproject.com/projectaut/

Duality in Knowledge Sharing:

http://www.iiia.csic.es/~marco/pubs/schorlemmer02a.pdf
